In 2019 I had the great fortune to attend the Zêzere Arts Festival in Portugal, where the festival choir performed my composition Sea Swell twice, in two quite incredible venues. After many Covid-related delays, I’m happy to say that the 360 video of the performance is now finally finished and can be seen below on YouTube.

Sea Swell was my first choral composition, which I wrote many years ago in 2007 while working on my PhD in Trinity College Dublin. The piece was commissioned by the Spatial Music Collective and New Dublin Voices choir, and was first performed by New Dublin Voices in Trinity Chapel, in January 2008.

The initial inspiration for this work came from some experiments with granulated white noise. When a very large grain duration was employed, the granulation algorithm produced a rolling noise texture which rose and fell in a somewhat irregular fashion and was highly reminiscent of the sound of breaking waves. This noise texture was originally intended to accompany the choir. In practice, however, this proved unnecessary, as the choir could easily produce hissing noise textures which achieved the same effect.
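For readers curious about what granulated white noise involves, here is a minimal sketch in plain Python. It is not the algorithm used for the original experiments; the function names, the Hann window, the random grain onsets and all parameter values are assumptions chosen for illustration. Each grain is a windowed burst of white noise, and overlapping a small number of long grains gives a level that swells and recedes irregularly.

```python
import math
import random


def hann(n):
    """Return an n-point Hann window (n >= 2)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate_noise(duration_s, grain_s, grains_per_s, sample_rate=8000, seed=0):
    """Overlap-add Hann-windowed grains of white noise at random onsets.

    All parameters are illustrative assumptions, not values from the piece.
    """
    rng = random.Random(seed)
    total = int(duration_s * sample_rate)
    grain_len = max(2, int(grain_s * sample_rate))
    window = hann(grain_len)
    out = [0.0] * total
    n_grains = max(1, int(duration_s * grains_per_s))
    for _ in range(n_grains):
        onset = rng.randrange(total)  # irregular grain placement
        for i, w in enumerate(window):
            j = onset + i
            if j >= total:
                break  # truncate grains that run past the end
            out[j] += w * rng.uniform(-1.0, 1.0)
    # Normalise so the loudest sample has magnitude 1.
    peak = max(abs(x) for x in out) or 1.0
    return [x / peak for x in out]


if __name__ == "__main__":
    # A few long (2 s) grains per second over 10 s: slow, wave-like swells.
    texture = granulate_noise(10.0, grain_s=2.0, grains_per_s=1.5)
    print(len(texture), "samples generated")
```

With short grains (a few milliseconds) the same function gives a much busier, crackling texture, so the grain duration is the parameter that produces the slow rise and fall described above.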

The choir is divided into four SATB groups positioned symmetrically around the audience and facing a central conductor. The piece utilizes many of Henry Brant’s ideas, albeit with a much less atonal harmonic language. In particular, spatial separation is used to clarify and distinguish individual lines within the extremely dense, sixteen-part harmony. If this chord were produced from a single spatial location, it would collapse into a dense and largely static timbre. However, this problem is significantly reduced when the individual voices are spatially distributed. Certain individual voices are further highlighted through offset rhythmic pulses and sustained notes.

As exact rhythmic coordination is difficult to achieve when the musicians are spatially separated, a slow, regular tempo is used throughout. The beat is further delineated through the use of alliteration in the text, as the unavoidable sibilant sounds mark the rhythmic pulse. These sibilant sounds are also used in isolation at the beginning of the piece, to create a wash of noise-like timbres which mimic the sounds of crashing waves suggested by the title. In the opening section, each singer produces a continuous unpitched “sssssssssss” sound which follows a given dynamic pattern. The angled lines in the score indicate the dynamics, which are divided into three levels: below the bar line indicates fading to or from silence, above the bar line indicates maximum loudness, and on the bar line indicates a medium level. The note durations are provided to indicate durations for the dynamic changes and do not indicate pitch. Instead, a continuous “ssssss” sound is produced for each entire phrase.

Brant used the term spill to describe the effect of overlapping material produced by spatially distributed groups of musicians. However, Brant was also aware of the perceptual limitations of this effect, and he noted that precise spatial trajectories become harder to determine as the complexity and density of the spatial scene increase. The opening of this piece contains a significant degree of spill due to the common tonality of each part and is thus perceived as a very large and diffuse texture. Large numbers of these sibilant phrases are overlapped in an irregular fashion to deliberately disguise the individual voices and create a very large, spatially complex timbre of rolling noise-like waves. The score of this opening section can be seen below.

The lyrics consist of some fragments of poetry by Pablo Neruda as well as some original lines of my own.

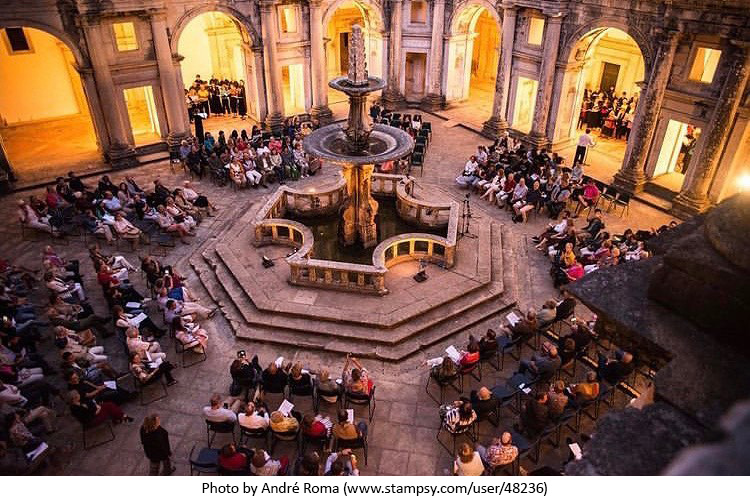

The piece was performed twice by the Zêzere Arts Festival choir, conducted by the wonderful Aoife Hiney, in two quite spectacular venues, namely the Convento de Cristo, Tomar (pictured above), and the Batalha Monastery. Both locations were very well suited to spatial performances, and so the concerts also featured some other well-known works of spatial music, most notably Tallis’ Spem in Alium (which was the subject of some previous posts). For the final 360 video I therefore decided to combine both performances in a single video, with a transition from the Convento de Cristo to the Batalha Monastery occurring approximately halfway through the piece.

The 360 video was filmed using the same GoPro Omni camera used in previous videos in this series (and discussed in more detail in previous posts). For the audio, a Sennheiser Ambeo 1st order Ambisonics microphone was positioned just below the 360 camera and used to record both concerts. Unfortunately, the recording from the first concert proved unusable due to excessive wind noise (note to self, don’t forget to bring your windshield to the actual concert!!). Fortunately, however, Gerard Lardner, a singer in the choir and a fellow spatial audio enthusiast, had a Zoom H3-VR microphone and recorder (with windshield), and so this recording was used instead for the first portion of the video.

The opening section of largely high frequency sibilant sounds proved quite revealing in terms of some of the limitations of Ambisonics, and particularly standard 1st Order Ambisonic (FOA) microphones. Although FOA mics are a very efficient and compact approach to spatial audio recording, there are some trade-offs, most significantly in this case in terms of the directional accuracy of high frequencies. In the venues themselves, the direction of the sibilance produced by each choir was quite clear, and in the opening section this produced a clear sense of a wash of sound spreading around the space. In the FOA recording, however (with either microphone), the directional accuracy was far less pronounced in this section, although later sections with more traditional vocal sounds were far better.

Although it was not practically possible at the time, this issue could have been addressed to some extent using alternative microphone arrangements. A number of Higher Order Ambisonic (HOA) microphones have become available in recent years, such as the Core Sound OctoMic (2nd order), Zylia ZM-1 (3rd order), or the mh acoustics Eigenmike (4th order). Although these require more channels of audio to be recorded, and HOA in general is not supported by all 360 video platforms, increasing the order has been shown to produce noticeable improvements in directional accuracy. Alternatively, entirely different, non-Ambisonic microphone arrays can also be used, and then later encoded into Ambisonics before being attached to the 360 video. An example of this approach, using 8 cardioid microphones in an octagonal arrangement known as the Equal Segment Microphone Array (ESMA), was discussed in a previous post. Although this approach does offer some advantages and is preferred by some producers, it is not always practical, particularly when the space available to set up the microphones is limited.
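To put a number on the extra channels mentioned above: a full-sphere Ambisonic signal of order N carries (N + 1)² channels once encoded. The short sketch below simply tabulates this for the orders of the microphones listed. The function name is my own, and note that this counts encoded Ambisonic channels, not the number of capsules in each microphone, which is usually different.

```python
def ambisonic_channels(order):
    """Number of channels in a full-sphere Ambisonic signal of the given order."""
    if order < 0:
        raise ValueError("Ambisonic order must be non-negative")
    return (order + 1) ** 2


for order in (1, 2, 3, 4):
    print(f"order {order}: {ambisonic_channels(order)} channels")
# order 1: 4 channels
# order 2: 9 channels
# order 3: 16 channels
# order 4: 25 channels
```

So a 3rd order mix needs four times as many channels as an FOA mix, which goes some way towards explaining why platform support for HOA has been slow to arrive.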

For this particular recording, therefore, FOA microphone recordings were required, and these provided reasonable directionality for the majority of the piece, apart from this opening section. However, some small improvements were possible using some post-production techniques and converting the FOA recordings to 3rd order in a couple of different ways. This was achieved using the wonderful Harpex plugin, which uses some sophisticated signal processing to process spatial audio signals of different types. Here, Harpex was first used to upmix the entire FOA mix to 3rd Order Ambisonics (TOA), which resulted in a small but noticeable improvement in directional accuracy throughout the recording. In addition, for the opening section discussed above, Harpex was also used to synthesize four highly directional shotgun microphones pointed in the direction of the four choirs. These signals were then re-encoded into TOA and blended back into the overall mix, in an attempt to improve the directional accuracy of the sibilant material in this opening section. This resulted in small improvements overall. However, as YouTube still only supports FOA, this new version with the TOA audio mix can only be played locally using VLC player or the excellent VIVE cinema app. In theory Facebook also supports 360 video with TOA audio; in practice, however, this is not always so simple. For example, it seems that such videos are supported on personal Facebook accounts, but not always on pages. However, if recent reports about Google’s efforts to develop an open, royalty-free media format are true, then perhaps support for HOA may finally become available on YouTube.
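To give a rough sense of why a more sophisticated tool was needed for the virtual shotgun microphones described above, the sketch below shows the simplest possible alternative: a plain linear virtual microphone derived from an FOA signal. This is emphatically not how Harpex works. It assumes AmbiX conventions (ACN channel order W, Y, Z, X with SN3D normalisation), the function names are my own, and the four choir azimuths are hypothetical values chosen only for illustration.

```python
import math


def encode_foa(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one sample of a plane wave into FOA (assumed AmbiX: W, Y, Z, X; SN3D)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    x = sample * math.cos(az) * math.cos(el)
    return w, y, z, x


def virtual_mic(foa, azimuth_deg, elevation_deg=0.0, pattern=0.5):
    """Linear first-order virtual microphone aimed at the given direction.

    pattern = 1.0 is omni, 0.5 is cardioid, 0.0 is figure-of-eight.
    """
    w, y, z, x = foa
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    directional = (x * math.cos(az) * math.cos(el)
                   + y * math.sin(az) * math.cos(el)
                   + z * math.sin(el))
    return pattern * w + (1.0 - pattern) * directional


# Hypothetical azimuths for four choirs placed symmetrically around the listener.
CHOIR_AZIMUTHS = (45.0, 135.0, -135.0, -45.0)

if __name__ == "__main__":
    source = encode_foa(1.0, azimuth_deg=45.0)  # a sound arriving from choir 1
    for az in CHOIR_AZIMUTHS:
        print(f"virtual cardioid at {az:+.0f} degrees: gain {virtual_mic(source, az):.2f}")
```

A virtual cardioid aimed at a neighbouring choir, 90 degrees away, still picks up the source at half amplitude (about 6 dB down), so first-order patterns like this cannot isolate one choir from the next. That limitation is what motivates the more directional, signal-dependent processing mentioned above.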

I would like to express my heartfelt thanks to all the singers of the Zêzere Arts Festival choir, and in particular to Aoife Hiney and Brian MacKay for the invitation to the festival. If you are a singer or instrumentalist, whether young or old, amateur or professional, then I simply cannot recommend the festival enough. Its combination of masterclasses, courses, workshops, collaborations with composers and artists, and of course performances is quite unique and offers an incredible career development experience in a truly wonderful location. More information on next year’s festival and how to apply can be found on their website, here.

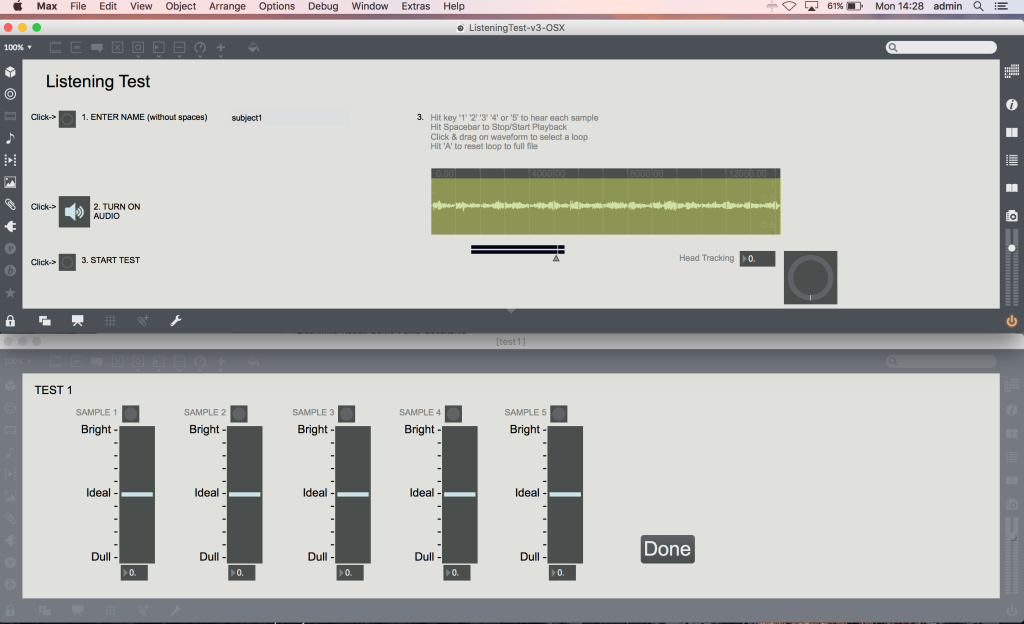

To investigate the subjective timbral quality of each microphone, a modified MUSHRA test was again implemented. However, as no reference recording is available for this particular experiment, all test stimuli are presented to the listener at the same time without a reference.
