The first 360 video from the concert is now online. In a nice piece of timing, YouTube have just released a new Android app that can play back 360 videos with spatial audio. So, if you happen to own a high-spec Android smartphone such as a Samsung Galaxy or Nexus (running Android 4.2 or higher), you can watch this video in a VR headset such as Cardboard, with matching spatial audio on headphones. Desktop browsers such as Chrome, Firefox and Opera (but not Safari), and the YouTube iOS app, will only play back a fixed stereo soundtrack for now, but this feature will presumably be added to these platforms in the near future.

This recording is of the first movement of From Within, From Without, as performed by Pedro López López, Trinity Orchestra, and Cue Saxophone Quartet in the Exam Hall, Trinity College Dublin, April 8th, 2016. You can read more about the composition of this piece in an earlier post on this blog, and much gratitude to François Pitié for all his work on the video edit.

Apart from YouTube’s Android app, 360 video players that support matching 360 audio are thin on the ground, at least for now. Samsung’s Gear VR platform supports spatial audio in a similar manner to YouTube, although only if you have a Samsung smartphone and VR headset. Facebook’s 360 video platform does not support 360 audio right now; however, the recent release of a free Spatial Audio Workstation for Facebook 360 suggests that this won’t be the case for long. The Workstation was developed by Two Big Ears and includes audio plugins for various DAWs, a 360 video player that can be synchronised to a DAW for audio playback, and various other authoring tools for 360 audio and video (although only for OS X at the moment).

The mono video stitch was created using VideoStitch Studio 2, which worked reasonably well but struggled a little with the non-standard camera configuration. My colleague François Pitié is currently investigating alternative stitching techniques that may produce better results.

The spatial audio mix combines a main ambisonic microphone with additional spot microphones, mixed into a four-channel ambisonic audio file (B-format, 1st order, ACN channel ordering, SN3D normalization), as per YouTube’s 360 audio specifications. As you can see in the video, we had three different ambisonic microphones to choose from: an MH Acoustics Eigenmike, a Core Sound TetraMic, and a Zoom H2n. We used the TetraMic in the end, as it produced the best tonal quality with reasonably good spatial accuracy.

As might be expected given the distance of the microphones from the instruments and the highly reverberant acoustic, all of the microphones produced quite spatially diffuse results, and the spot microphones were most certainly needed to pull instruments into position. The Eigenmike was seriously considered, as it produced the best results in terms of directionality (which is unsurprising given its more complex design). However, the tonal quality of the Eigenmike was noticeably inferior to that of the TetraMic, and as the spot mics could be used to add back some of the missing directionality, tonal quality proved to be the deciding factor in the end.

The Zoom H2n was in certain respects a backup to the other two microphones, as this inexpensive portable recorder cannot really compete with dedicated microphones such as the TetraMic. However, despite its low cost, it works surprisingly well as a horizontal-only ambisonic microphone, and was in fact used to capture the ambient sound in the opening part of the above video (our TetraMic picked up too much wind noise on that occasion, so the right type of windshield for this microphone is strongly recommended for exterior recordings).

While we used our own software to convert the raw four-channel recording from the Zoom into a B-format, 1st order ambisonic audio file (this will be released as a plugin in the coming months), there is now a firmware update for the recorder that allows this format to be recorded directly on the device. This means you can record audio with the H2n, add it to your 360 video and upload it to YouTube without any further processing beyond adding some metadata (more on this below). So, although far from perfect (e.g. there is no vertical component in the recording), this is definitely the cheapest and easiest way to record spatial audio for 360 video.

The raw four-channel TetraMic recording first had to be calibrated and then converted into a B-format ambisonic signal using the supplied VVMic for TetraMic VST plugin. Note, however, that this B-format signal, like the output of most ambisonic microphones (apart from the Zoom H2n), uses the traditional Furse-Malham (FuMa) channel order and normalization. It must therefore be converted to ACN channel ordering and SN3D normalization using another plugin, such as the AmbiX converter or Bruce Wiggins’ plugin, as shown in the screenshot below.
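At first order, the conversion these plugins perform is simple enough to sketch. The following is a minimal Python illustration, not the code used by either plugin, and it assumes the signal is held as a list of floating-point (W, X, Y, Z) frames in FuMa order. W is scaled up by √2 to undo the FuMa −3 dB weighting, and the channels are reordered into ACN’s W, Y, Z, X. The function names are hypothetical, and higher-order material needs more than this.

```python
import math

SQRT2 = math.sqrt(2.0)


def fuma_to_ambix_frame(frame):
    """Convert one first-order FuMa frame (W, X, Y, Z) to AmbiX (ACN/SN3D: W, Y, Z, X)."""
    w, x, y, z = frame
    # FuMa stores W scaled by 1/sqrt(2) (-3 dB); SN3D does not, so undo that gain.
    # First-order X, Y and Z have the same scaling in FuMa and SN3D,
    # so for them only the channel order changes.
    return (w * SQRT2, y, z, x)


def fuma_to_ambix(frames):
    """Convert a list of first-order FuMa frames to AmbiX frames."""
    return [fuma_to_ambix_frame(f) for f in frames]
```

For example, `fuma_to_ambix([(0.7071, 0.5, 0.25, 0.1)])` gives a single frame whose W is roughly 1.0, followed by Y = 0.25, Z = 0.1 and X = 0.5.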

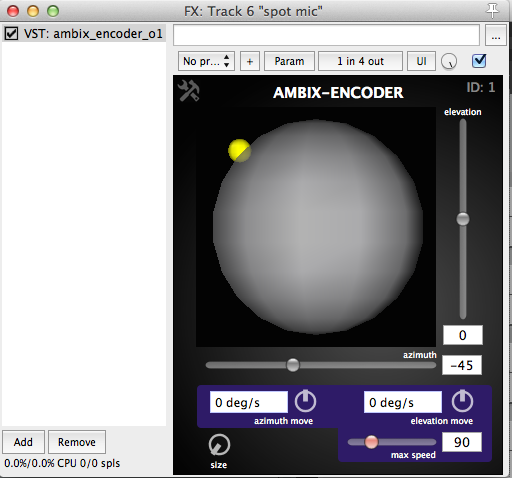

In addition to the ambisonic microphones positioned at the camera, we also used a number of spot mics (AKG 414s), in particular for the brass and percussion at the sides and back of the hall. These were mixed into the main microphone recording using an AmbiX plugin, which encodes each mono spot mic recording into a four-channel ambisonic signal and positions it spatially, as shown below.
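Encoding a mono spot mic in a given direction amounts to multiplying it by the first-order spherical-harmonic gains for that direction. The sketch below is a minimal Python illustration of the idea, not the AmbiX plugin’s implementation. It assumes float samples, azimuth in degrees measured anticlockwise from the front (so +90° is hard left), elevation measured upwards from the horizontal, and output frames in ACN order with SN3D normalization, matching the format described above. Both `encode_mono_foa` and `mix` are hypothetical names; `mix` simply sums an encoded spot mic into the main microphone’s B-format frames.

```python
import math


def encode_mono_foa(samples, azimuth_deg, elevation_deg=0.0, gain=1.0):
    """Encode mono samples as first-order ambisonic frames (ACN order W, Y, Z, X; SN3D)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # SN3D first-order encoding gains, in ACN channel order.
    coeffs = (
        1.0,                          # W: omnidirectional
        math.sin(az) * math.cos(el),  # Y: left/right
        math.sin(el),                 # Z: up/down
        math.cos(az) * math.cos(el),  # X: front/back
    )
    return [tuple(gain * s * c for c in coeffs) for s in samples]


def mix(main_frames, spot_frames):
    """Sum two lists of four-channel frames; stops at the shorter of the two."""
    return [tuple(a + b for a, b in zip(fm, fs)) for fm, fs in zip(main_frames, spot_frames)]
```

In practice each spot mic would also need its level (and possibly its delay relative to the main microphone) adjusted by ear before being summed in this way.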

As these spot microphones were positioned closer to the instruments than the main microphone, and were mixed directly into the main recording in this way, they provide much of the perceived directionality in the final mix, while the main microphone provides a more diffuse impression of the room. This traditional close mics plus room mic approach was needed in this particular case because it was a live performance with an audience, and the musicians were distributed around the hall.

However, this is quite different from how spatial audio recordings (such as 5.1 surround recordings) are usually made. These recordings tend to emphasize the main microphone, with minimal or even no use of spot microphones. To do this, though, the main microphone arrangement must be placed quite close to the musicians (above the conductor’s head, for example) so that it captures a good balance of the direct signal (which gives us a sense of direction) and the diffuse room reverberation (which gives us a sense of spaciousness and envelopment). Often this is achieved by splitting the main microphone configuration into two arrangements, one positioned quite close to the musicians and another further away. This is much harder to do with a single microphone such as the TetraMic, particularly when an audience is present and the musicians are distributed all around the room.

This is one of the things we will be exploring in our next recording, which will be of a quartet (including such fine musicians as Kate Ellis, Nick Roth, and Lina Andonovska) and without an audience. This will allow us to position the musicians much closer to the central recording position, and so capture a better sense of directionality with the main microphone and rely less on spot microphones.

Google have released a template project for the Reaper DAW that demonstrates how to do all of the above. It also includes a binaural decoder and a rotation plugin that simulate the decoding performed by the Android app. For installation instructions and download links for the decoder presets and the Reaper template, see this link. The same workflow can also be implemented using the same plugins in Adobe Premiere, as shown here. Bruce Wiggins has a nice analysis on his blog of the head-related transfer functions (HRTFs) used to convert the ambisonic mix to a binaural mix for headphones, which you can read here.
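The rotation stage is easy to illustrate at first order. As a rough sketch (not the code in Google’s plugin), rotating an ACN/SN3D frame about the vertical axis only mixes the X and Y channels, while W and Z are unchanged. A player compensating for head movement would rotate the sound field by the negative of the listener’s head yaw before binaural decoding. The function name and the anticlockwise-positive sign convention are assumptions of this example.

```python
import math


def rotate_yaw(frame, angle_deg):
    """Rotate a first-order ACN/SN3D frame (W, Y, Z, X) about the vertical axis.

    A positive angle turns the sound field anticlockwise (seen from above), so a
    source at azimuth a ends up at azimuth a + angle_deg.
    """
    w, y, z, x = frame
    t = math.radians(angle_deg)
    x_rot = x * math.cos(t) - y * math.sin(t)
    y_rot = x * math.sin(t) + y * math.cos(t)
    # W (omni) and Z (vertical) are unaffected by a rotation about the vertical axis.
    return (w, y_rot, z, x_rot)


def compensate_head_yaw(frames, head_yaw_deg):
    """Counter-rotate the field so sources stay fixed as the listener turns their head."""
    return [rotate_yaw(f, -head_yaw_deg) for f in frames]
```

With this convention, a source straight ahead (X = 1, Y = 0) rotated by 90° ends up hard left (X ≈ 0, Y ≈ 1).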

Finally, once you’ve created a four-channel ambisonic audio file for your 360 video, you need to add some metadata so that YouTube knows this is a spatial audio signal, as shown here. My colleague François Pitié describes how to do this on his blog, as well as how to use the command-line converter FFmpeg to combine the final 360 video and audio files. That article also demonstrates how to use FFmpeg to prepare a 360 video file for direct playback on an Android phone using Google’s Jump Inspector app.

Hi! Great article! I have a problem, though. When I import my WAV file (recorded with a Zoom H2n) into the DAW, the 3rd channel is silent, as if it didn’t record anything. Am I missing something? Could one of the mics be broken?

Hi Cristiano, no, that’s actually correct. That four-channel file is in the AmbiX format, so the four channels correspond to the W, Y, Z, and X components respectively. As the H2n is adapted for this type of recording, rather than being a true ambisonic microphone, it doesn’t have any mics pointing up or down, and so there is no vertical information in the Z channel. It does keep a dummy channel there, though, so that the channel order is maintained and the file works correctly with other software, plugins, etc. (horizontal only, of course).
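To confirm that a silent third channel is this expected empty Z channel rather than a faulty capsule, you can check the peak level of each channel. The following is a minimal Python sketch using only the standard library. It assumes a 16-bit PCM WAV file with channels in AmbiX (W, Y, Z, X) order. The `channel_peaks` function and the file name in the usage note are hypothetical.

```python
import array
import wave

ACN_NAMES = ("W", "Y", "Z", "X")


def channel_peaks(path):
    """Return the peak absolute sample value of each channel in a 16-bit PCM WAV file."""
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        n = wav.getnchannels()
        samples = array.array("h", wav.readframes(wav.getnframes()))
    # Samples are interleaved frame by frame, so channel c is every n-th sample from c.
    return [max((abs(s) for s in samples[c::n]), default=0) for c in range(n)]
```

For a horizontal-only H2n recording, `dict(zip(ACN_NAMES, channel_peaks("h2n_spatial.wav")))` should show a peak of zero (or very close to it) only for Z, while W, Y and X carry signal.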

Hi Enda, great article. If I need to split the four-channel AmbiX file into two files (W/Y channels and Z/X channels) in order to use them in Unity with GvrAudioSoundfield, what is the procedure? Thanks.

Hi Edgar, there are a few options for that. The free utility WaveAgent can split a multichannel file into multiple mono files. However, you can also do it directly in Reaper. Just right-click on the four-channel audio clip, go to Item Settings > Take Channel Mode: Stereo, and then you can choose to use only a specific pair of channels from that clip. If you duplicate the clip and select channels 1&2 on the first copy and channels 3&4 on the second, you can then render the two stereo files from Reaper.
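The same split can also be scripted. This is a minimal Python sketch using only the standard `wave` module. It assumes an uncompressed PCM WAV file in AmbiX order and copies channels 1&2 (W, Y) and 3&4 (Z, X) into two stereo files. Because it copies bytes without decoding samples, it works for any sample width. The function name and file names are hypothetical. The sketch does not check what GvrAudioSoundfield expects beyond this channel pairing, so that is worth confirming against its documentation.

```python
import wave


def split_into_pairs(src_path, first_path, second_path):
    """Write channels 1-2 (W, Y) and 3-4 (Z, X) of a 4-channel WAV to two stereo WAVs."""
    with wave.open(src_path, "rb") as src:
        if src.getnchannels() != 4:
            raise ValueError("expected a 4-channel file")
        width = src.getsampwidth()
        rate = src.getframerate()
        raw = src.readframes(src.getnframes())

    frame_size = 4 * width  # bytes per interleaved 4-channel frame
    pair_size = 2 * width   # bytes per channel pair within a frame
    first, second = bytearray(), bytearray()
    for i in range(0, len(raw), frame_size):
        first += raw[i:i + pair_size]                # channels 1 and 2
        second += raw[i + pair_size:i + frame_size]  # channels 3 and 4

    for path, data in ((first_path, first), (second_path, second)):
        with wave.open(path, "wb") as out:
            out.setnchannels(2)
            out.setsampwidth(width)
            out.setframerate(rate)
            out.writeframes(bytes(data))
```

Usage would be along the lines of `split_into_pairs("ambix.wav", "wy.wav", "zx.wav")`.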
